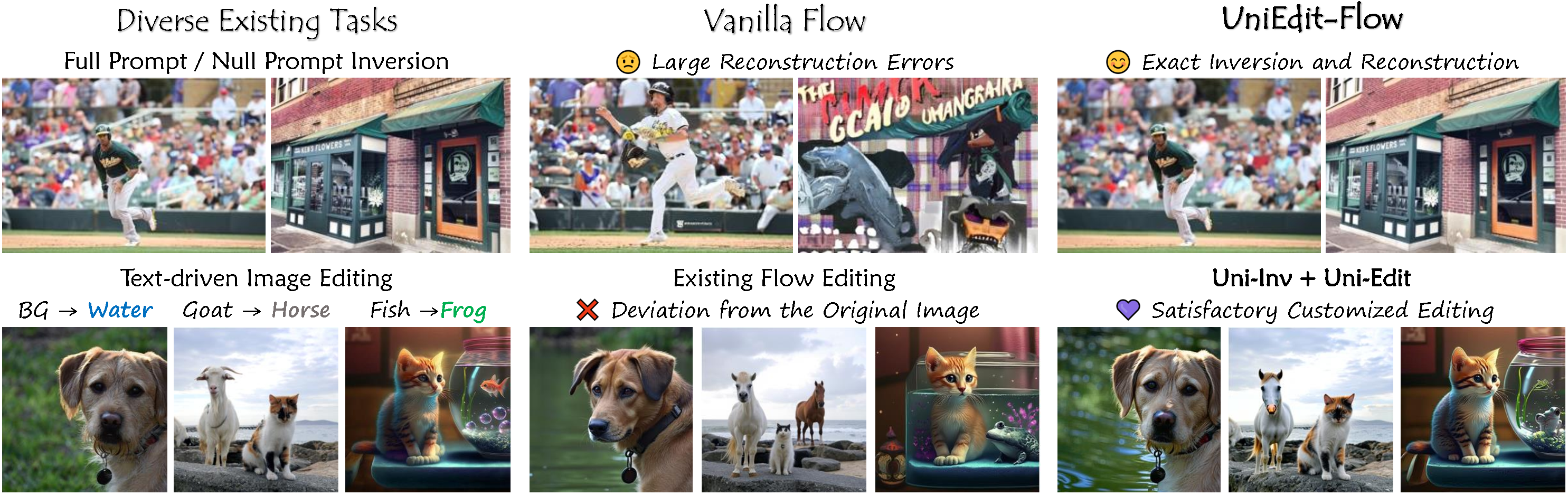

UniEdit-Flow for image inversion and editing. Our approach is a highly accurate and efficient, model-agnostic, training- and tuning-free sampling strategy for flow models that tackles image inversion and editing. Cluttered scenes are difficult to invert and reconstruct, and various methods fail on them. Our Uni-Inv achieves exact reconstruction even in such complex situations (1st row). Furthermore, existing flow editing methods always exhibit undesirable effects, whereas our region-aware, sampling-based Uni-Edit performs excellently in both editing and background preservation (2nd row).

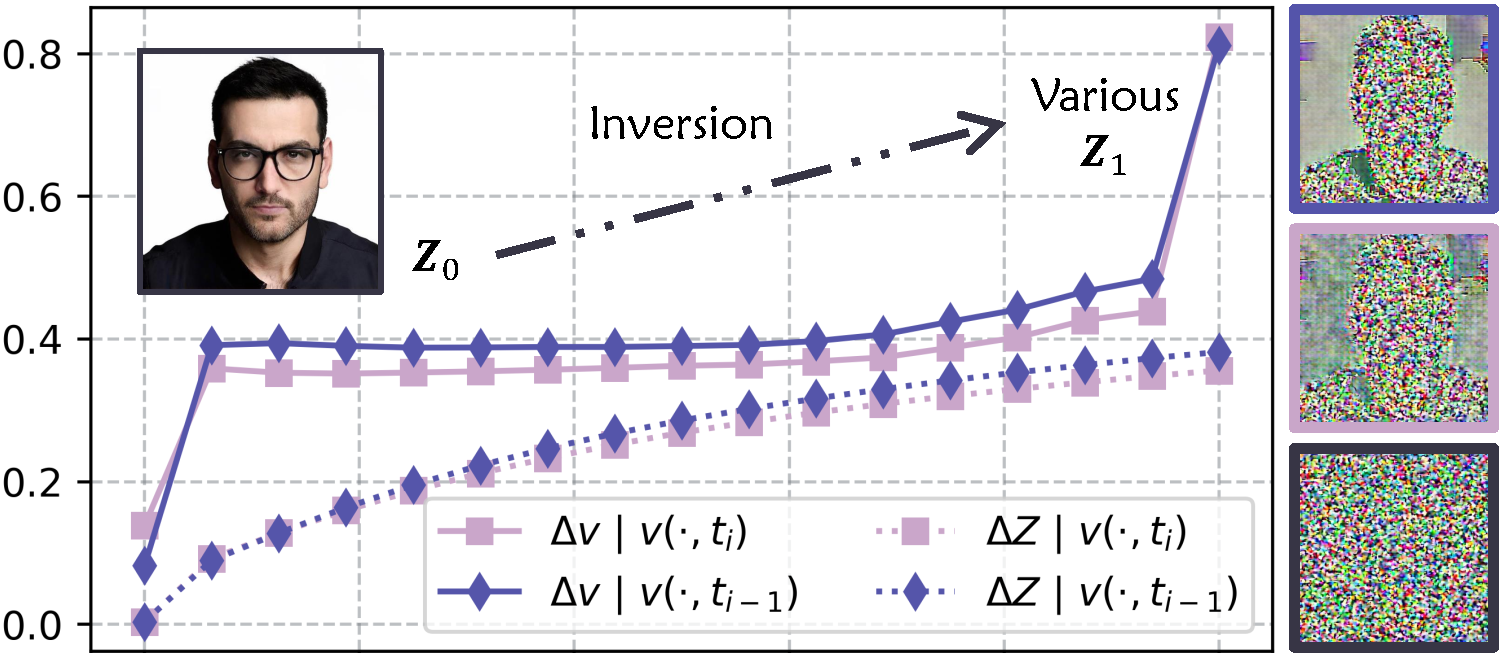

Per-step error of the velocities and samples of vanilla inversions.

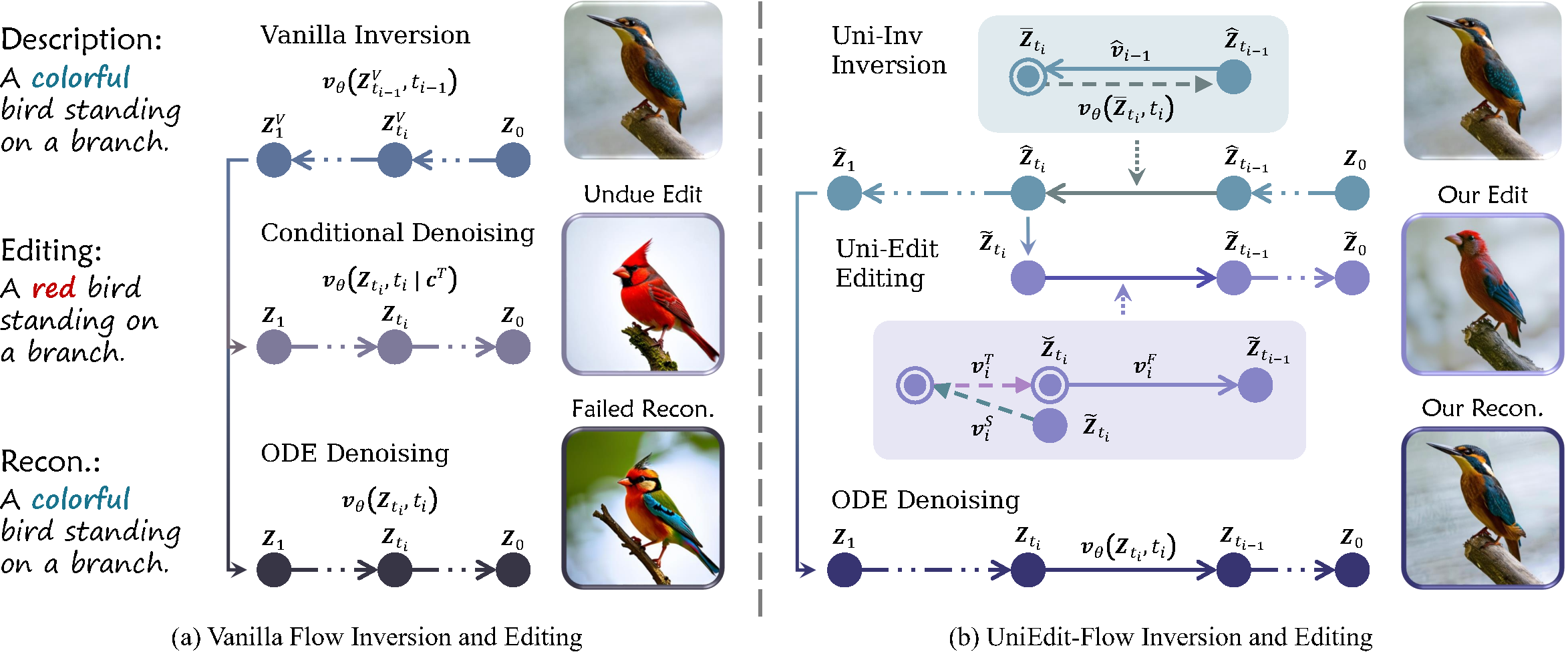

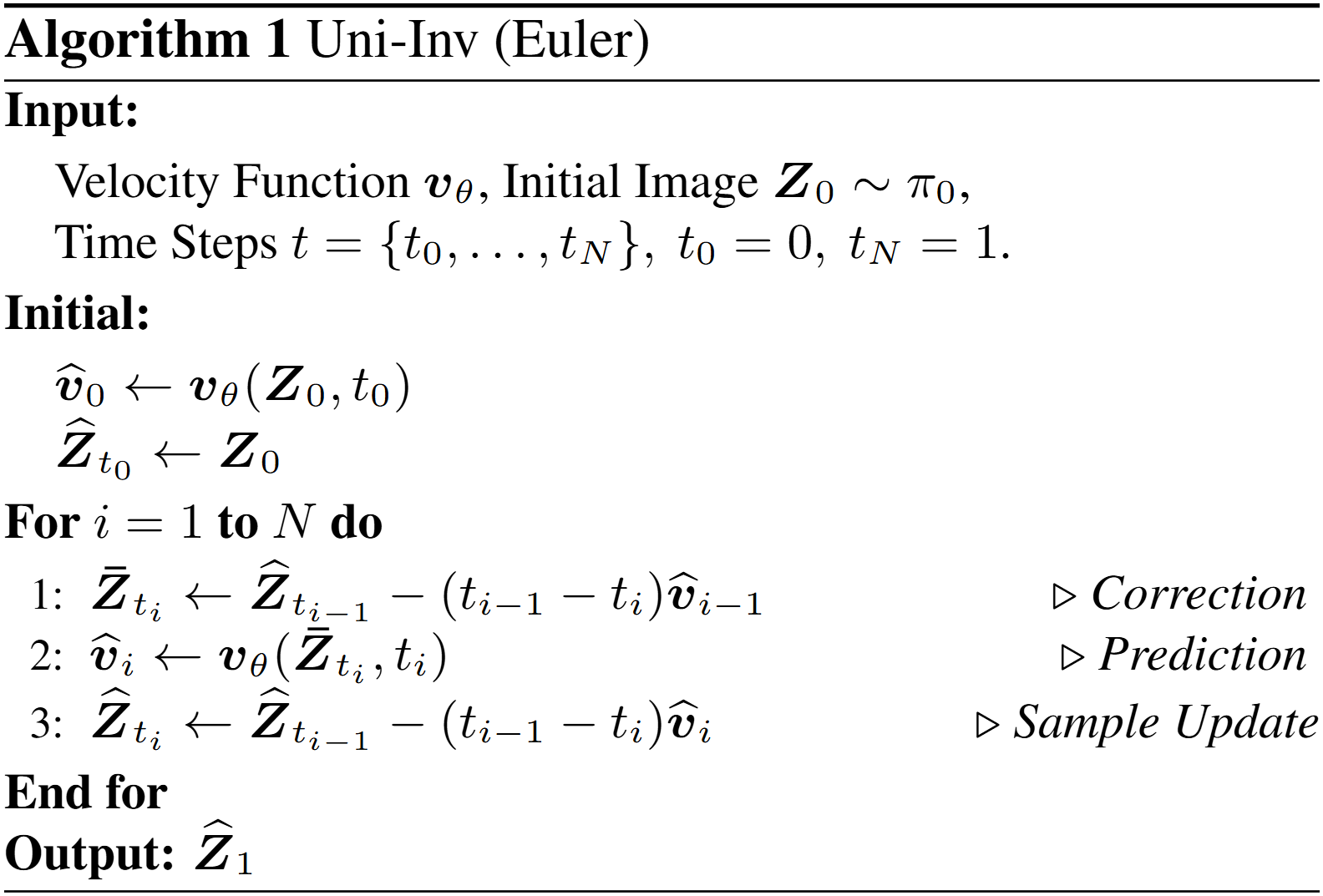

We first synthesize an image \(\boldsymbol{Z}_0\), then perform vanilla inversion to obtain the inverted noise \(\boldsymbol{Z}_1\) with per-step velocity \(\boldsymbol{v}_\theta(\boldsymbol{\widehat{Z}}_{t_{i-1}}, t_{i-1})\) (
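To make the per-step error concrete, here is a minimal toy sketch, assuming a plain Euler solver and a hypothetical linear velocity field `velocity(z, t) = a * z` standing in for \(\boldsymbol{v}_\theta\) (it is not the paper's model, and the function names are illustrative). Vanilla inversion advances each interval using the velocity at \(t_{i-1}\), while sampling traverses the same interval using the velocity at \(t_i\); this mismatch leaves a nonzero reconstruction error that shrinks as the number of steps grows.

```python
import numpy as np

def velocity(z, t, a=1.0):
    """Hypothetical stand-in for v_theta(z, t): the linear field dz/dt = a * z.
    It ignores t; a real flow model would not."""
    return a * z

def vanilla_invert(z0, timesteps):
    """Euler inversion from t=0 (image) to t=1 (noise).
    Each step uses the velocity at the previous point, t_{i-1}."""
    z = z0.copy()
    traj = [z.copy()]
    for t_prev, t_cur in zip(timesteps[:-1], timesteps[1:]):
        z = z + (t_cur - t_prev) * velocity(z, t_prev)
        traj.append(z.copy())
    return z, traj

def denoise(z1, timesteps):
    """Euler sampling from t=1 back to t=0, using the velocity at t_i."""
    z = z1.copy()
    traj = [z.copy()]
    rev = timesteps[::-1]
    for t_cur, t_next in zip(rev[:-1], rev[1:]):
        z = z + (t_next - t_cur) * velocity(z, t_cur)
        traj.append(z.copy())
    return z, traj[::-1]  # reorder so index i matches timesteps[i]

def reconstruction_error(z0, num_steps):
    ts = np.linspace(0.0, 1.0, num_steps + 1)
    z1, inv_traj = vanilla_invert(z0, ts)
    z0_rec, den_traj = denoise(z1, ts)
    # Per-step sample error between the inversion and denoising trajectories.
    per_step = [float(np.linalg.norm(p - q)) for p, q in zip(inv_traj, den_traj)]
    return float(np.linalg.norm(z0_rec - z0)), per_step

z0 = np.ones(4)  # toy "image"
for n in (10, 100):
    err, per_step = reconstruction_error(z0, n)
    print(n, "steps: reconstruction error", err)
```

In this toy each inversion/sampling round trip over one interval of width \(h\) multiplies the sample by \(1 - a^2 h^2\), so the error is second order per step; the error is zero at \(t = 1\) (both trajectories share \(\boldsymbol{Z}_1\)) and accumulates toward \(t = 0\).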

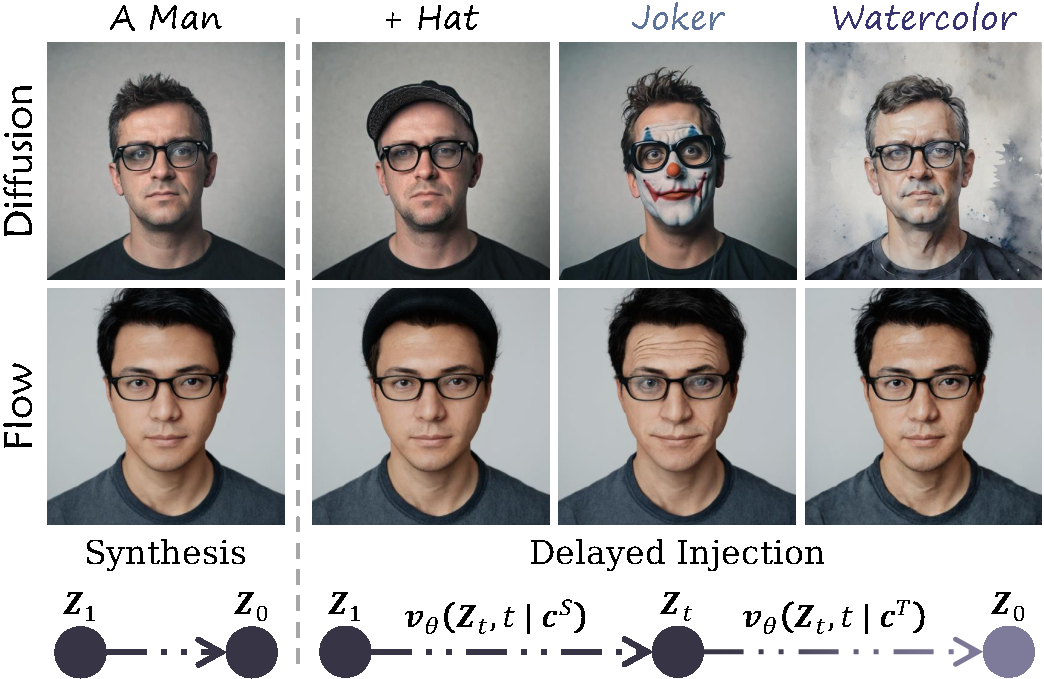

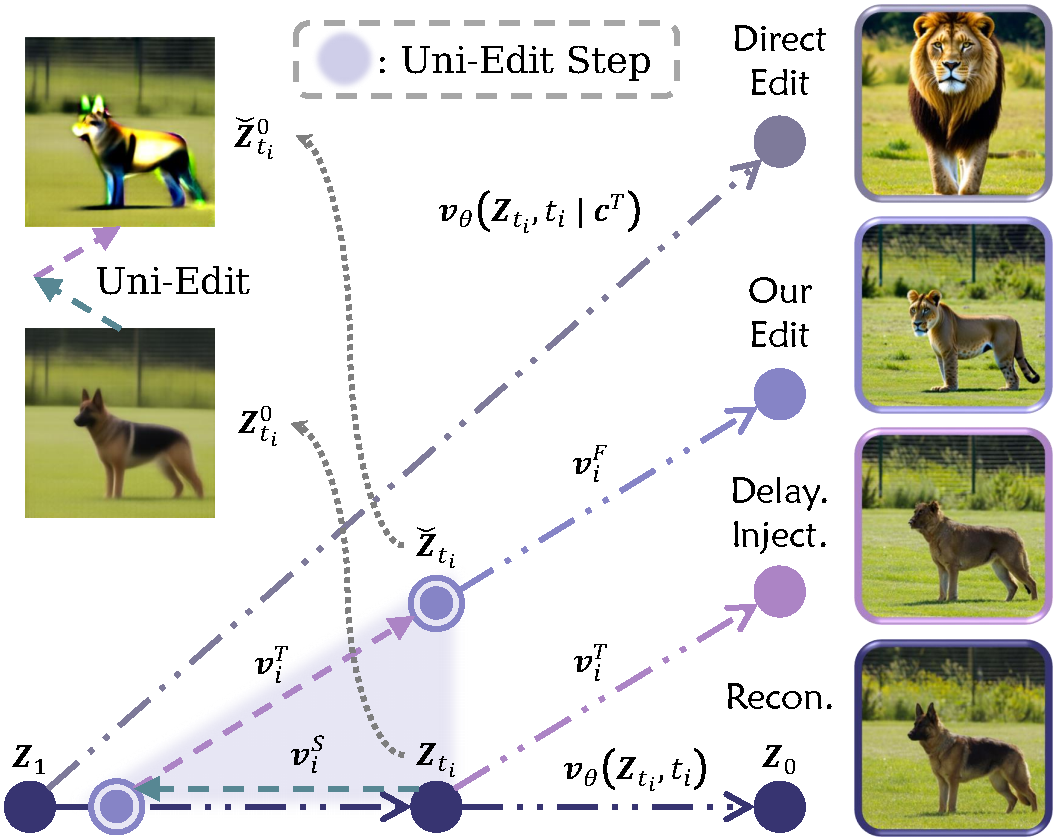

Demonstration of various sampling-based image editing methods (dog \(\xrightarrow{}\) lion).

Directly using \(\boldsymbol{c}^T\) as the condition leads to excessive editing.

Delayed injection, which is widely used in diffusion-based methods, inevitably yields incomplete edits when applied to deterministic models.

Our Uni-Edit mitigates the components obtained in early steps that are not conducive to editing, ultimately achieving satisfactory results.
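The two baseline strategies differ only in which condition drives each sampling step. The sketch below is a toy illustration under loud assumptions: `cond_velocity` is a hypothetical field that pulls the sample toward a 2-D "concept" vector, and `c_src` / `c_tgt` are made-up stand-ins for the source and target prompts. It does not implement Uni-Edit, whose details are not given here. It only shows that feeding \(\boldsymbol{c}^T\) from the first step drifts farthest from the source (a crude proxy for over-editing), while delaying the target condition stays closer to the source but falls short of the target.

```python
import numpy as np

def cond_velocity(z, t, c):
    """Hypothetical stand-in for v_theta(z, t, c): integrated from t=1 to t=0,
    it pulls z toward the concept vector c."""
    return z - c

def sample(z1, timesteps, cond_at_step):
    """Euler sampling from t=1 to t=0; cond_at_step(i) picks the condition."""
    z = z1.copy()
    rev = timesteps[::-1]
    for i, (t_cur, t_next) in enumerate(zip(rev[:-1], rev[1:])):
        z = z + (t_next - t_cur) * cond_velocity(z, t_cur, cond_at_step(i))
    return z

num_steps = 20
ts = np.linspace(0.0, 1.0, num_steps + 1)
c_src = np.array([1.0, 0.0])  # toy "dog" concept (hypothetical)
c_tgt = np.array([0.0, 1.0])  # toy "lion" concept (hypothetical)
z1 = np.zeros(2)              # toy inverted noise

# Direct: the target condition c^T at every step.
z_direct = sample(z1, ts, lambda i: c_tgt)
# Delayed injection: the source condition for the first k steps, then the target.
k = 10
z_delayed = sample(z1, ts, lambda i: c_src if i < k else c_tgt)

for name, z in [("direct", z_direct), ("delayed", z_delayed)]:
    print(name, "dist to target:", np.linalg.norm(z - c_tgt),
          "dist to source:", np.linalg.norm(z - c_src))
```

Because the sampler is deterministic, whatever the early steps commit to cannot be undone later; raising `k` trades target fidelity for source similarity in this toy.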

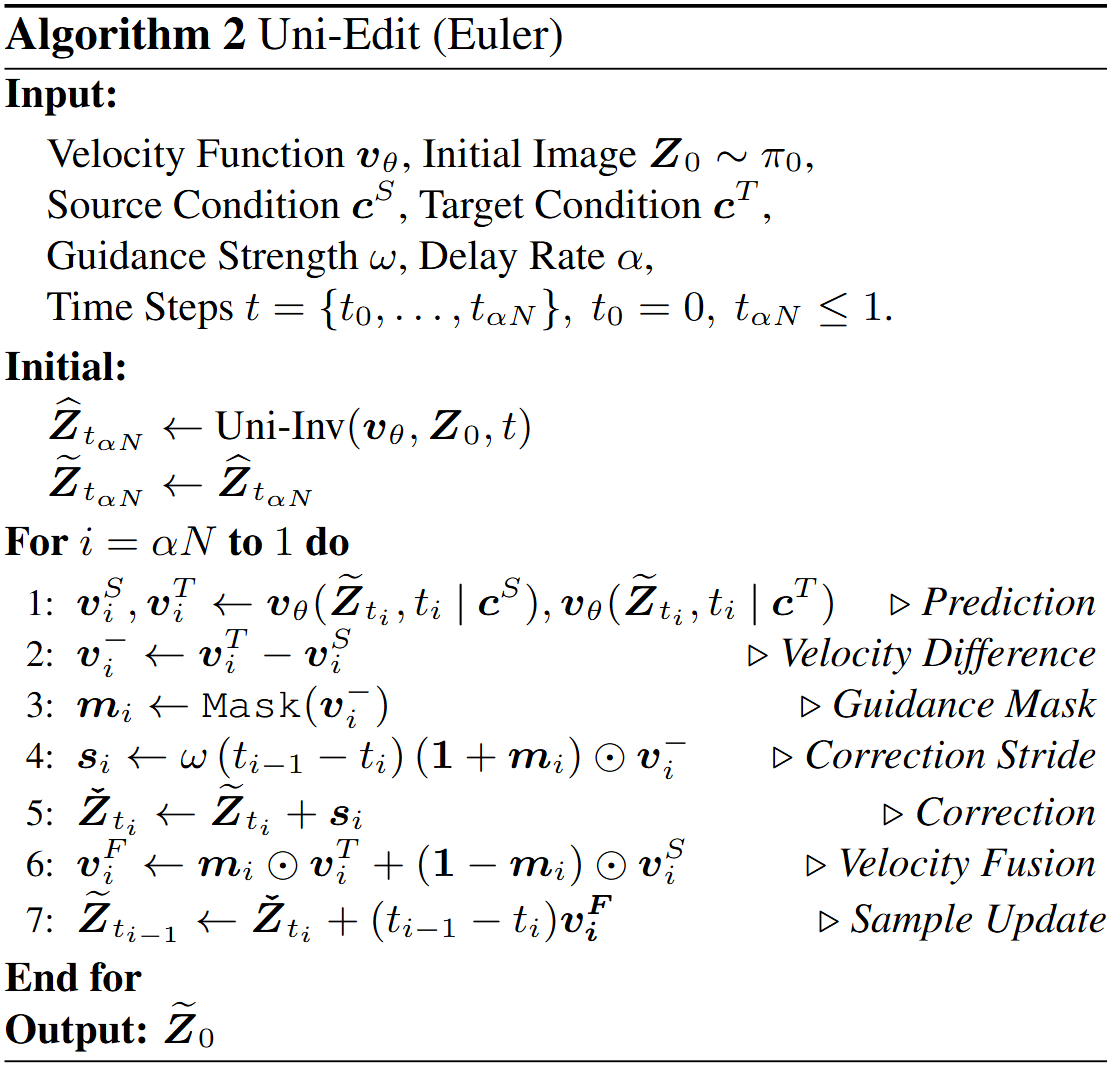

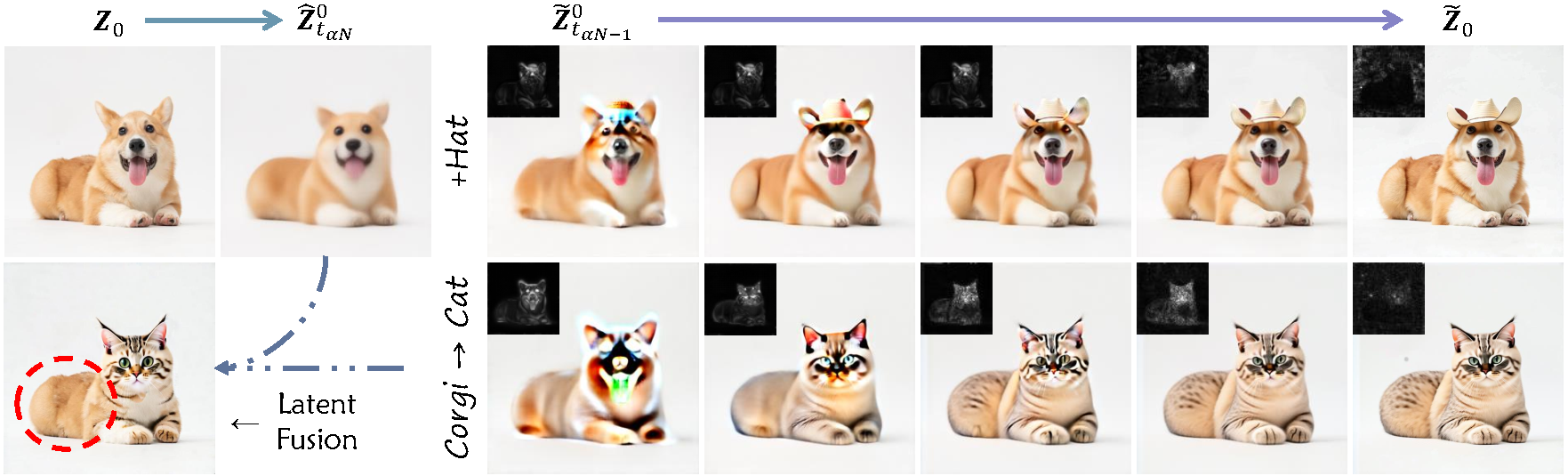

Visualization of Uni-Edit process.

The guidance mask of each denoising step is shown in the upper right corner of the image.

The lower left of the figure also shows the "Sphinx" phenomenon that existing latent fusion approaches may cause.
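For reference, the generic latent fusion step that such approaches build on can be sketched as a per-pixel blend between the edited and source latents under a mask. This is a minimal sketch of the common masked-blending idea, not Uni-Edit's guidance mechanism; `latent_fusion` and the toy mask are hypothetical names and values.

```python
import numpy as np

def latent_fusion(z_edit, z_src, mask):
    """Generic mask-based latent blending: where the mask is 1 keep the edited
    latent, where it is 0 copy the source latent; fractional values interpolate."""
    mask = np.clip(mask, 0.0, 1.0)
    return mask * z_edit + (1.0 - mask) * z_src

# Toy 2x2 latents: the edited branch is all ones, the source branch all zeros.
z_src = np.zeros((2, 2))
z_edit = np.ones((2, 2))
mask = np.array([[1.0, 0.0],
                 [0.5, 0.0]])  # hypothetical per-step mask
fused = latent_fusion(z_edit, z_src, mask)
print(fused)
```

In a sampler this blend would be applied to the latents at the current timestep after every denoising step. Because the background is overwritten outright rather than guided, edited and copied regions are stitched together, which is the kind of fusion the figure associates with the "Sphinx" phenomenon.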