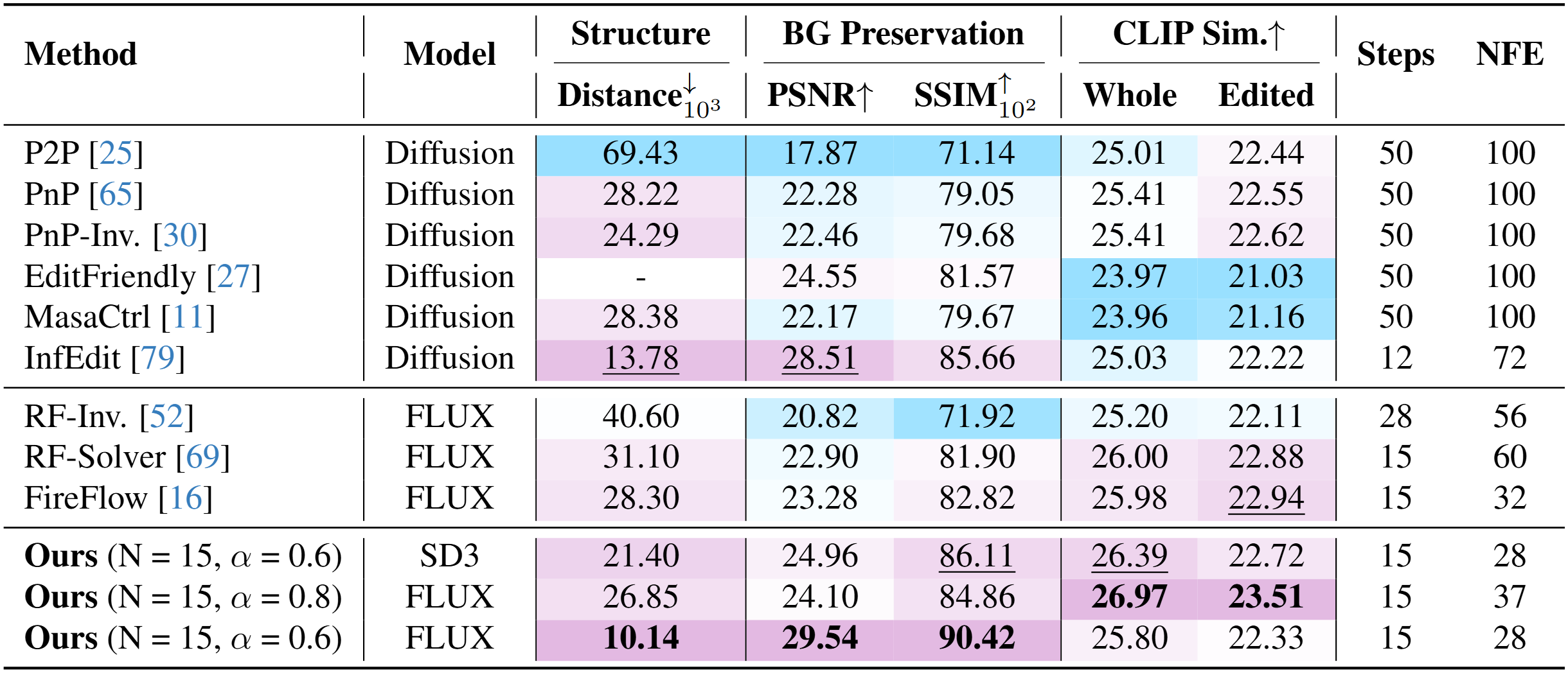

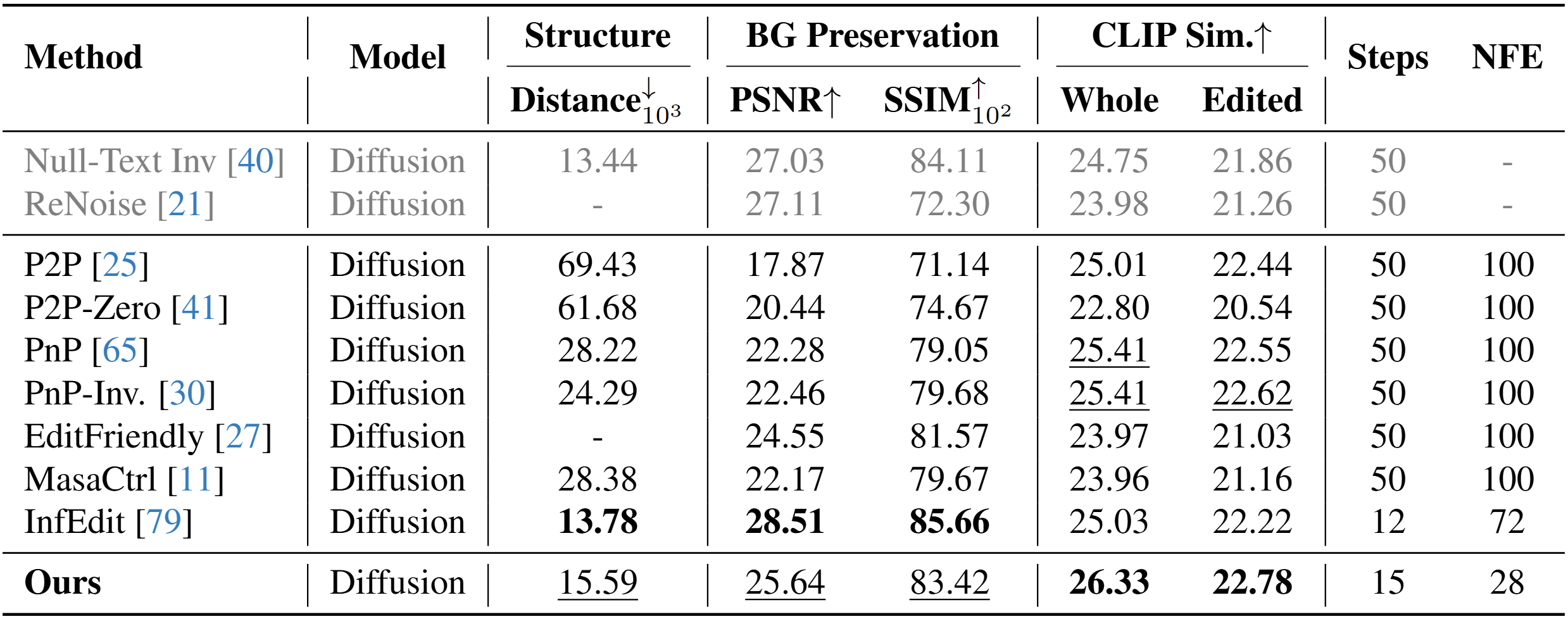

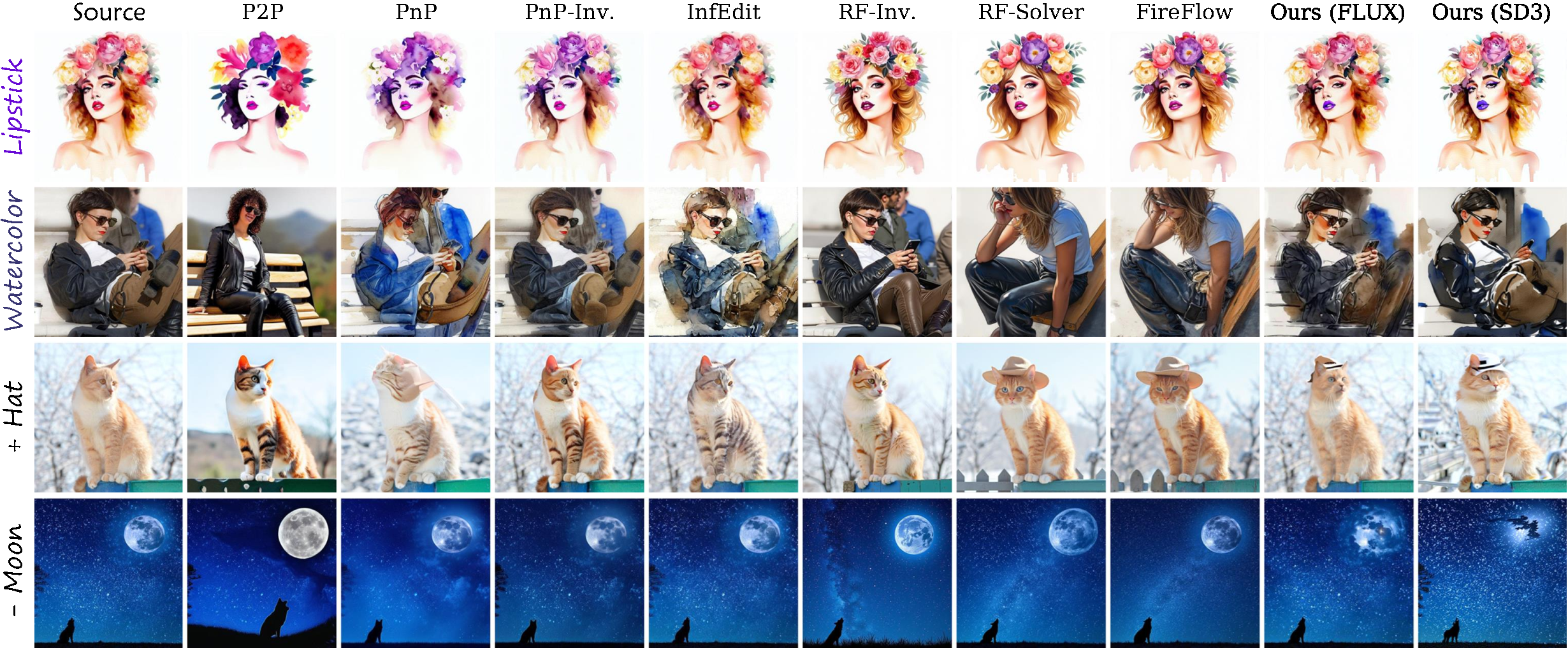

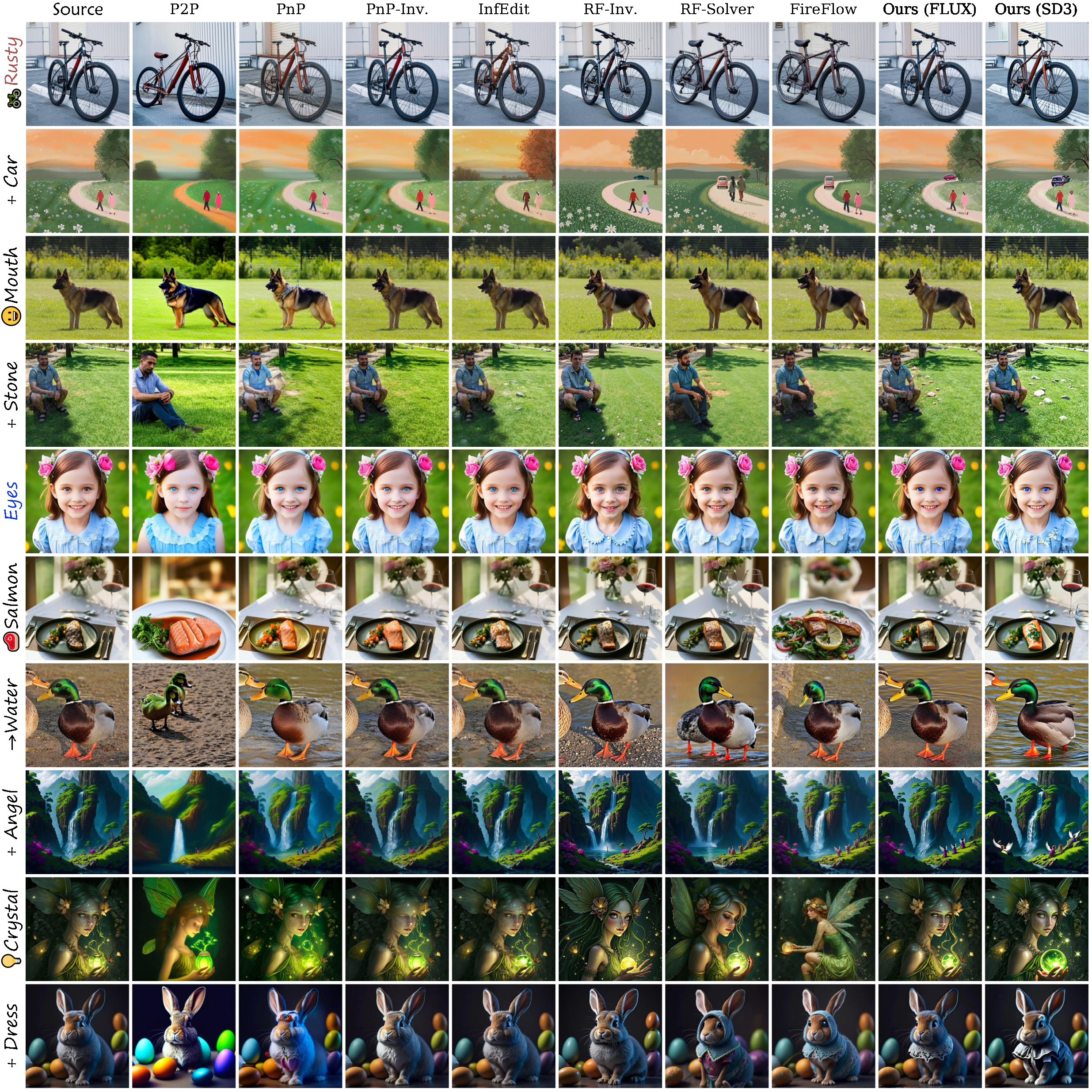

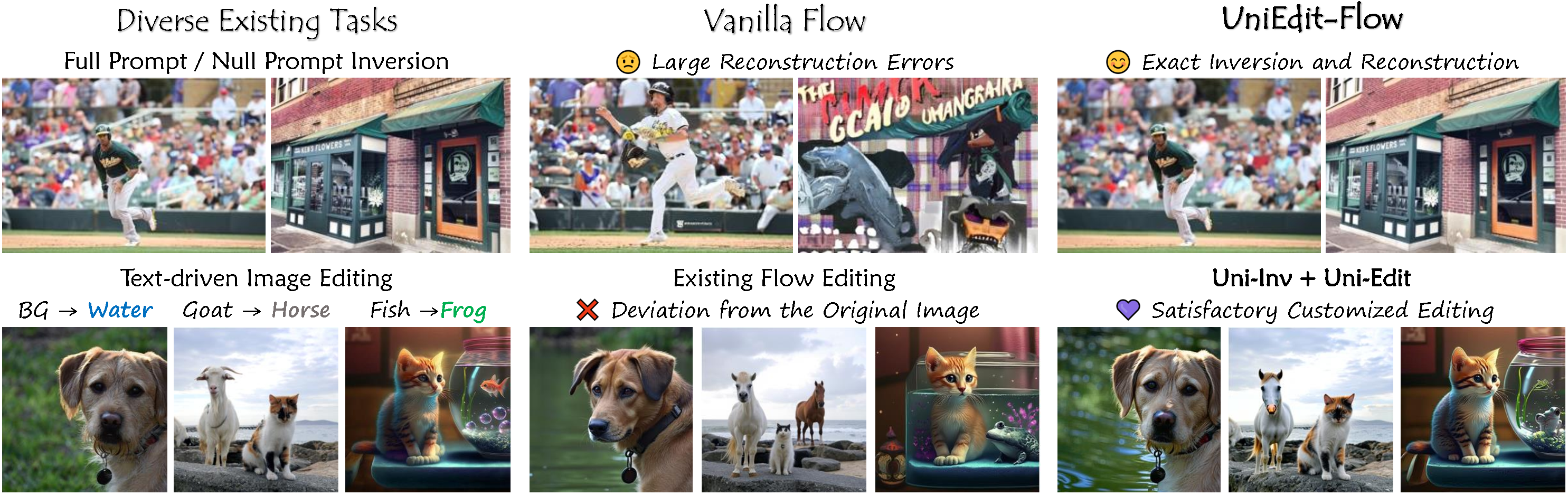

UniEdit-Flow for image inversion and editing. We propose a highly accurate, efficient, model-agnostic, training- and tuning-free sampling strategy for flow models that tackles image inversion and editing. Cluttered scenes are difficult to invert and reconstruct, causing various methods to fail. Our Uni-Inv achieves exact reconstruction even in such complex cases (first row). Furthermore, existing flow-based editing methods tend to leave undesirable effects, whereas our region-aware, sampling-based Uni-Edit delivers excellent performance in both editing and background preservation (second row).